Setting up secure communication between two Exchange environments with TLS

In this example we have two separate environments, each with its own domain, that are not connected in any way and have no trust in place: domaina.com and domainb.com. Each site has a DC with AD CS installed (DCDomA and DCDomB) and an Exchange server that acts as both CAS and Mailbox server (ExchDomA and ExchDomB). First we need to make sure each Exchange server has the other domain’s CA root certificate installed, by importing the root certificate from site A into site B (so site B can validate server A) and from site B into site A (so site A can validate server B).

So we must first install a CA in each environment (assuming that you will not be using a public CA) and import each environment’s root CA certificate into the other using the Certificates snap-in - place the root certificates under “Trusted Root Certification Authorities” on the computer account.
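To illustrate why the partner's root certificate is needed, here is a minimal sketch of the same chain validation done with OpenSSL instead of the Windows certificate store. The demo root CA, the file names and the host name exchdoma.domaina.com are assumptions made up for the example; in the real setup the root comes from AD CS on DCDomA.

```shell
# Work in a scratch directory so nothing existing is overwritten.
workdir=$(mktemp -d)
cd "$workdir"

# Throwaway root CA standing in for domaina.com's AD CS root (hypothetical name).
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -keyout rootA.key -out rootA.crt -subj "/CN=DomainA Demo Root CA"

# Server key and CSR for ExchDomA, then a certificate issued by the demo root.
openssl req -new -newkey rsa:2048 -nodes \
  -keyout exchdoma.key -out exchdoma.csr -subj "/CN=exchdoma.domaina.com"
openssl x509 -req -in exchdoma.csr -CA rootA.crt -CAkey rootA.key \
  -CAcreateserial -days 1 -out exchdoma.crt

# What site B can do once it trusts rootA.crt: the server certificate
# chains to the root, so this prints "exchdoma.crt: OK".
openssl verify -CAfile rootA.crt exchdoma.crt
```

Without rootA.crt available as a trusted root, the same verify call fails, which is the situation each Exchange server is in before the other site's root certificate has been imported.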

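Next we generate a private key and a certificate signing request (CSR) for the Exchange server with OpenSSL.

*** Note: before I could get the following command to work with the Windows build of OpenSSL, I had to set an environment variable as follows: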

set OPENSSL_CONF=C:\OpenSSL-Win32\bin\openssl.cfg

***

openssl req -new -newkey rsa:2048 -nodes -keyout server.key -out server.csr
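If you would rather not answer the interactive prompts, the subject can be passed on the command line, and the resulting CSR can be checked before it is submitted. This is only a sketch: the common name exchdoma.domaina.com is an assumed FQDN for ExchDomA, so substitute the name your partner will actually connect to. On Windows the same options apply, but the command goes on one line without the backslash.

```shell
# Work in a scratch directory so nothing existing is overwritten.
workdir=$(mktemp -d)
cd "$workdir"

# Generate a 2048-bit RSA key and a CSR in one step; -subj skips the prompts.
# The CN is an assumed host name, not one taken from a real environment.
openssl req -new -newkey rsa:2048 -nodes \
  -keyout server.key -out server.csr \
  -subj "/CN=exchdoma.domaina.com"

# Check the CSR's self-signature and print its subject before submitting it.
openssl req -in server.csr -noout -verify -subject
```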

Submit the request via Certificate Services, approve the request using the Certification Authority snap-in and then go back to Certificate Services to download the certificate. Make sure that it is downloaded in Base64 format, otherwise OpenSSL won’t be able to read it (i.e. the first line of the .cer file should be: -----BEGIN CERTIFICATE-----). We will now need to create a .pfx (Personal Information Exchange) file that will contain our private key and newly issued certificate:

openssl pkcs12 -export -out domain.name.pfx -inkey domain.name.key -in domain.name.crt
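The two things that most often go wrong here are a certificate saved in binary (DER) rather than Base64 format, and a certificate that does not belong to the private key. The sketch below shows one way to check both and then confirm the .pfx can be read back. The key and self-signed certificate are throwaway stand-ins created by the script, and the password "changeit" is a placeholder, not a recommendation.

```shell
# Work in a scratch directory so nothing existing is overwritten.
workdir=$(mktemp -d)
cd "$workdir"

# Stand-ins for the real files: a throwaway key and a self-signed certificate,
# saved in DER (binary) form to mimic a download in the wrong format.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -keyout domain.name.key -out demo.pem -subj "/CN=exchdoma.domaina.com"
openssl x509 -in demo.pem -outform DER -out domain.name.cer

# A Base64 (PEM) file starts with the BEGIN CERTIFICATE marker; convert if not.
if head -n 1 domain.name.cer | grep -q -- "-----BEGIN CERTIFICATE-----"; then
  cp domain.name.cer domain.name.crt
else
  openssl x509 -inform DER -in domain.name.cer -out domain.name.crt
fi

# The certificate and the private key must carry the same public key.
cert_pub=$(openssl x509 -in domain.name.crt -noout -pubkey)
key_pub=$(openssl pkey -in domain.name.key -pubout)
[ "$cert_pub" = "$key_pub" ] && echo "key matches certificate"

# Build the .pfx (placeholder password supplied inline) and read it back.
openssl pkcs12 -export -out domain.name.pfx \
  -inkey domain.name.key -in domain.name.crt -passout pass:changeit
openssl pkcs12 -in domain.name.pfx -passin pass:changeit -noout && echo "pfx readable"
```

With your own files, skip the stand-in section and point the remaining commands at the key generated earlier and the certificate downloaded from Certificate Services.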

I strongly recommend you stick with OpenSSL for anything related to certificates, private keys etc. (you will save yourself a lot of hassle!)

Or, if you don’t have access to a Linux environment (or an OpenSSL implementation for Windows), you can use the pvk2pfx and cert2spc utilities provided by Microsoft in the “Microsoft Windows SDK for Windows 7 and .NET Framework” pack (http://www.microsoft.com/en-us/download/details.aspx?id=8279).

We must first convert the private key that OpenSSL generated into a format that the pvk2pfx utility can read - we do this using a tool called pvktool (http://www.drh-consultancy.demon.co.uk/pvk.html):

pvk -in privatekey.key -out privatekey-ms.key -topvk

We also need to convert the .cer file to an .spc file with cert2spc:

Cert2spc input.cer output.spc

And now create the pfx file with pvk2pfx:

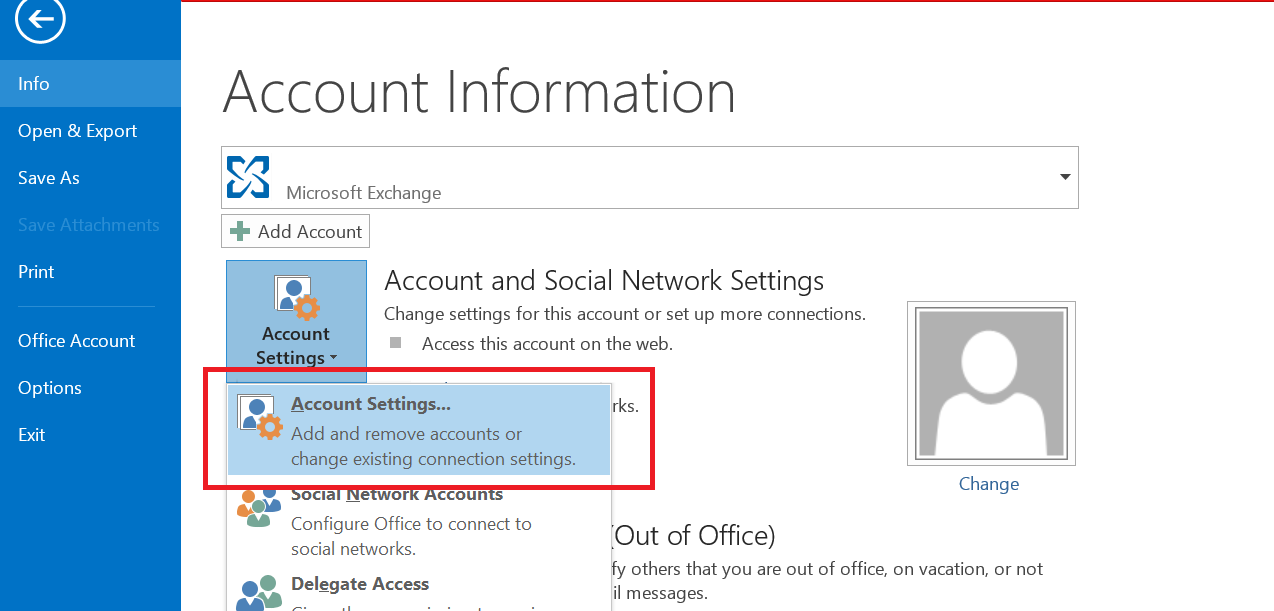

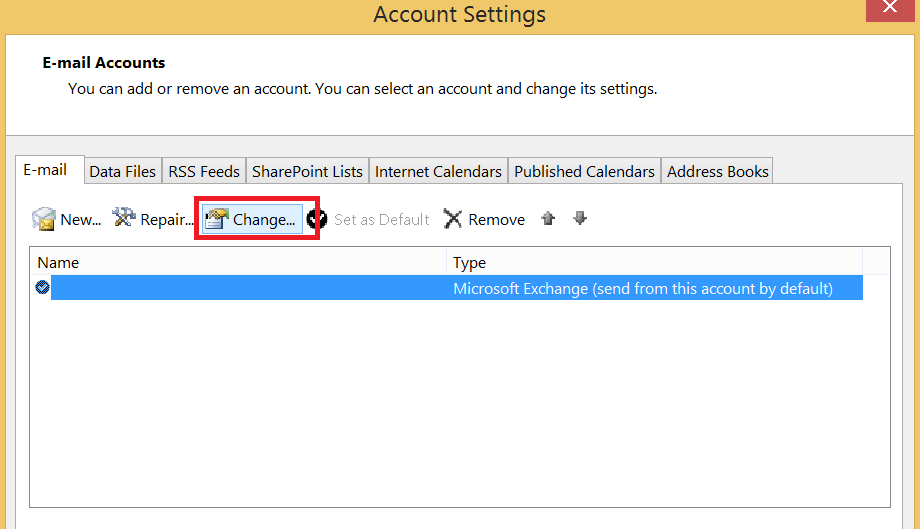

Now we will import our .pfx file on our Exchange server. This can be accomplished via the ECP by going to Servers >> Certificates.

You must also double-click on the certificate afterwards and make sure “SMTP” is ticked under the “Services” tab!

Or you can use the Exchange Shell:

Import-ExchangeCertificate -FileData ([Byte[]]$(Get-Content -Path C:\temp\certroot.pfx -Encoding Byte -ReadCount 0)) | Enable-ExchangeCertificate -Services SMTP

Once the certificates have been installed we can hook the Exchange servers on the two sites together.

We will focus on making sure sent mail works between the two sites first. To do this we create a new Send Connector, choose the "Internal" connector type and define a smart host (the IP address or hostname of the Exchange / CAS server on the other site).

We then select “Externally secured” and finally add an "Address Space", which simply defines which domains this send connector should be used for.

Now we add a receive connector on the other server, selecting the "Custom" type, specifying the source server etc. We then go into the new connector’s properties and make sure the “Externally secured” and "Anonymous Users" check boxes are selected. It is also vital that DNS entries for the partner Exchange server are present in each environment!

Now we send a test message and check that it has been delivered successfully by looking at the Queue Viewer. Once we have confirmed receipt we can add domain security using TLS.

We need to configure the transport settings, instructing the first Exchange server to use TLS for receive and send operations with the other domain:

Set-TransportConfig -TLSSendDomainSecureList domaina.com # so anyone sending from domaina.com should use TLS

Set-TransportConfig -TLSReceiveDomainSecureList domainb.com # so anyone sending to domaina.com from domainb.com should use TLS

And we will then do the same (but inverted) on domainb.com:

Set-TransportConfig -TLSSendDomainSecureList domainb.com

Set-TransportConfig -TLSReceiveDomainSecureList domaina.com

We need to modify the send connector we created for domainb.com on domaina.com and make sure “Proxy through client access server” is checked.

We will now modify the receive connector on domainb.com: untick “Externally secured”, tick “Transport Layer Security (TLS)” and “Enable domain security (mutual Auth TLS)”, and finally make sure only “Partners”, “Anonymous Users” and “Exchange Servers” are ticked in the Permission Groups options.

We now need to perform the inverse operation for mail flowing from domainb.com to domaina.com - I won’t cover this as all of the procedures are listed above.

Finally, if everything goes OK you should see a green tick next to mail you send between the domains - clicking on this will inform the user that the mail item has been secured and has not been modified in any way.