First, we query Exchange through the Exchange Management Shell (EMS) to list all available databases:

```powershell
Get-MailboxDatabase
```

Once we have identified the database, we can double-check where its files currently live on the file system:

```powershell
# Show only the database name and its .edb and log folder paths
Get-MailboxDatabase -Identity "Mailbox Database 000001" | Format-List Name, EdbFilePath, LogFolderPath
```

Next, we dismount the database:

```powershell
Dismount-Database "Mailbox Database 000001"
```

Then we use the "Move-DatabasePath" cmdlet to move the database file and its transaction logs to the new location:

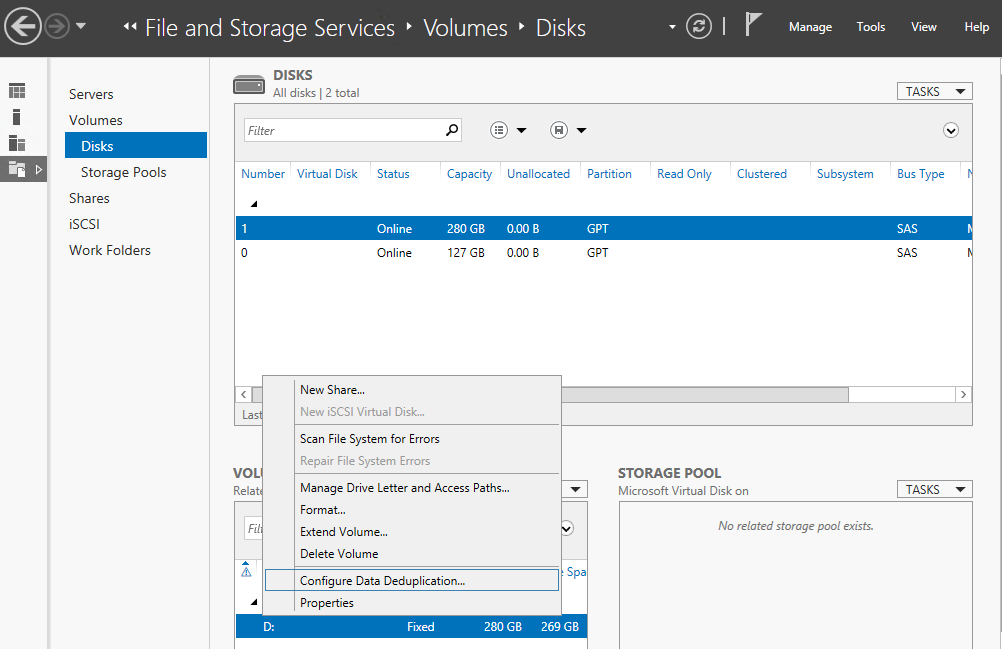

```powershell
Move-DatabasePath "Mailbox Database 000001" -EdbFilePath "D:\Mailbox Database 000001\Mailbox Database 000001.edb" -LogFolderPath "D:\Mailbox Database 000001\"
```
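If you move databases regularly, the steps above can be wrapped in a single function. The following is a minimal sketch, not a definitive implementation. `Move-MailboxDatabaseFiles` is a hypothetical helper name, not an Exchange cmdlet. The sketch assumes it runs in the EMS, that the .edb file and its logs belong in the same target folder (as in the example above), and that suppressing the confirmation prompts with `-Confirm:$false` is acceptable. Because it is not certain that the database ends up mounted after the move, the function checks the `Mounted` value reported by `Get-MailboxDatabase -Status` before it calls `Mount-Database`.

```powershell
# Hypothetical helper, not part of Exchange: wraps the manual steps above.
# Assumes it runs in the Exchange Management Shell and that the .edb file
# and its transaction logs should end up in the same target folder.
function Move-MailboxDatabaseFiles {
    param(
        [Parameter(Mandatory = $true)] [string] $Database,     # e.g. "Mailbox Database 000001"
        [Parameter(Mandatory = $true)] [string] $TargetFolder  # e.g. "D:\Mailbox Database 000001"
    )

    # Build the new paths with plain string formatting, dropping any trailing backslash
    $folder  = $TargetFolder.TrimEnd('\')
    $edbPath = '{0}\{1}.edb' -f $folder, $Database

    # Take the database offline, then move the .edb file and its logs without prompting
    Dismount-Database -Identity $Database -Confirm:$false
    Move-DatabasePath -Identity $Database -EdbFilePath $edbPath -LogFolderPath $folder -Confirm:$false

    # Mount the database again if it is not already mounted after the move
    if (-not (Get-MailboxDatabase -Identity $Database -Status).Mounted) {
        Mount-Database -Identity $Database
    }

    # Return the new locations so they can be checked
    Get-MailboxDatabase -Identity $Database | Select-Object Name, EdbFilePath, LogFolderPath
}
```

A possible call, using the names from this walkthrough: `Move-MailboxDatabaseFiles -Database "Mailbox Database 000001" -TargetFolder "D:\Mailbox Database 000001"`. The function returns objects instead of formatted text, so you can pipe the result to `Format-List` or compare it against the paths you expected.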