Firstly confirm you have the appropriate hardware version (there are two for the BCM4360!)

lspci -vnn | grep Net

The 'wl' module only supports the '14e4:43a0' version.
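
The check above can also be scripted. The following is a minimal sketch, assuming the usual 'lspci -nn' output format where the vendor and device ID appear in square brackets; the function name 'is_wl_supported' and the sample line are made up for illustration:

```shell
#!/bin/bash
# Succeeds if the supplied lspci output contains the 14e4:43a0 device ID.
is_wl_supported() {
    printf '%s\n' "$1" | grep -qi '\[14e4:43a0\]'
}

# Illustrative sample line only - substitute real lspci output on your machine.
sample='03:00.0 Network controller [0280]: Broadcom Limited BCM4360 802.11ac Wireless Network Adapter [14e4:43a0] (rev 03)'

if is_wl_supported "$sample"; then
    echo "Device ID 14e4:43a0 found - the wl module should support it"
else
    echo "Device ID 14e4:43a0 not found"
fi
```

On a real machine you could call it with: is_wl_supported "$(lspci -vnn | grep Net)"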

The RPM Fusion repository has kindly already packaged it up for us - so let's firstly add the repo:

sudo dnf install -y https://download1.rpmfusion.org/nonfree/fedora/rpmfusion-nonfree-release-26.noarch.rpm https://download1.rpmfusion.org/free/fedora/rpmfusion-free-release-26.noarch.rpm

sudo dnf install -y broadcom-wl kernel-devel

sudo akmods --force --kernel `uname -r` --akmod wl

sudo modprobe -a wl

Monday, 31 July 2017

Thursday, 27 July 2017

curl: 8 Command Line Examples

curl is a great addition to any scripter's arsenal and I tend to use it quite a lot - so I thought I would demonstrate some of its features in this post.

Post data (as parameters) to url

curl -d _username="admin" -d password="<password>" https://127.0.0.1/login.php

Ensure curl follows redirects

curl -L google.com

Limit download bandwidth (2 MB per second)

curl --limit-rate 2M -O http://speedtest.newark.linode.com/100MB-newark.bin

Perform basic authentication

curl -u username:password https://localhost/restrictedarea

Enabling debug mode

curl -v https://localhost/file.zip

Using an HTTP proxy

curl -x proxy.squid.com:3128 https://google.com

Spoofing your user agent

curl -A "Spoofed User Agent" https://google.co.uk

Sending cookies along with a request

curl -b cookies.txt https://example.com

Wednesday, 26 July 2017

Windows Containers / Docker Networking: Inbound Communication

When working with Windows Containers I got a really bad headache trying to work out how to setup inbound communication to the container from external hosts.

To summarise my findings:

In order to allow inbound communication you will need to use either the '--expose' switch on its own or '--expose' along with the '-p' ('--publish') switch - each of them does slightly different things.

'--expose': When specified, Docker will expose (make accessible) a port to other containers only.

'-p' ('--publish'): When used in conjunction with '--expose' the port will also be available to the outside world.

Note: The above switches must be specified during the creation of a container - for example:

docker run -it --cpus 2 --memory 4G -p 80:80 --expose 80 --network=<network-id> --ip=10.0.0.254 --name <container-name> -h <container-hostname> microsoft/windowsservercore cmd.exe

If your container is on a 'transparent' (bridged) network you will not be able to specify the '-p' switch and will instead have to open up the relevant port on the Docker host, along with using the '--expose' switch, in order to make the container accessible to external hosts.

Tuesday, 25 July 2017

git: Removing sensitive information from a repository

While you can use the 'git filter-branch' command to effectively erase all trace of a file from a repository - there is a much quicker way to do this using BFG Repo-Cleaner.

Firstly grab an up to date copy of the repo with:

git pull https://github.com/user123/project.git master

Remove the file from the current branch:

git rm 'dirtyfile.txt'

Commit the changes to the local repo:

git commit -m "removal"

Push changes to the remote repo:

git push origin master

Download and execute BFG Repo-Cleaner:

cd /tmp

yum install jre-headless

wget http://repo1.maven.org/maven2/com/madgag/bfg/1.12.15/bfg-1.12.15.jar

cd /path/to/git/repo

java -jar /tmp/bfg-1.12.15.jar --delete-files dirtyfile.txt

Purge the reflog with:

git reflog expire --expire=now --all && git gc --prune=now --aggressive

Finally forcefully push changes to the remote repo (the history has been rewritten, so a normal push will be rejected):

git push --force origin master

Friday, 21 July 2017

Querying a PostgreSQL database

Firstly ensure your user has adequate permissions to connect to the postgres server in pg_hba.conf.

For the purposes of this tutorial I will be using the postgres user:

sudo su - postgres

psql

\list

\connect snorby

or

psql snorby

For help we can issue:

\?

to list the databases:

\l

and to view the tables:

\dt

to get a description of the table we issue:

\d+ <table-name>

we can then query the table e.g.:

select * from <table> where <column-name> between '2017-07-19 15:31:09.444' and '2017-07-21 15:31:09.444';

and to quit:

\q

Thursday, 20 July 2017

Resolved: wkhtmltopdf: cannot connect to X server

Unfortunately the latest versions of wkhtmltopdf are not headless and as a result you will need to download wkhtmltopdf version 0.12.2 in order to get it running in a CLI environment. I haven't had any luck with any other versions - but please let me know if there are any other versions confirmed working.

The other alternative is to fake an X server - however (personally) I prefer to avoid this approach.

You can download version 0.12.2 from here:

cd /tmp

wget https://github.com/wkhtmltopdf/wkhtmltopdf/releases/download/0.12.2/wkhtmltox-0.12.2_linux-centos7-amd64.rpm

rpm -i wkhtmltox-0.12.2_linux-centos7-amd64.rpm

rpm -ql wkhtmltox

/usr/local/bin/wkhtmltoimage

/usr/local/bin/wkhtmltopdf

/usr/local/include/wkhtmltox/dllbegin.inc

/usr/local/include/wkhtmltox/dllend.inc

/usr/local/include/wkhtmltox/image.h

/usr/local/include/wkhtmltox/pdf.h

/usr/local/lib/libwkhtmltox.so

/usr/local/lib/libwkhtmltox.so.0

/usr/local/lib/libwkhtmltox.so.0.12

/usr/local/lib/libwkhtmltox.so.0.12.2

/usr/local/share/man/man1/wkhtmltoimage.1.gz

/usr/local/share/man/man1/wkhtmltopdf.1.gz

Tuesday, 18 July 2017

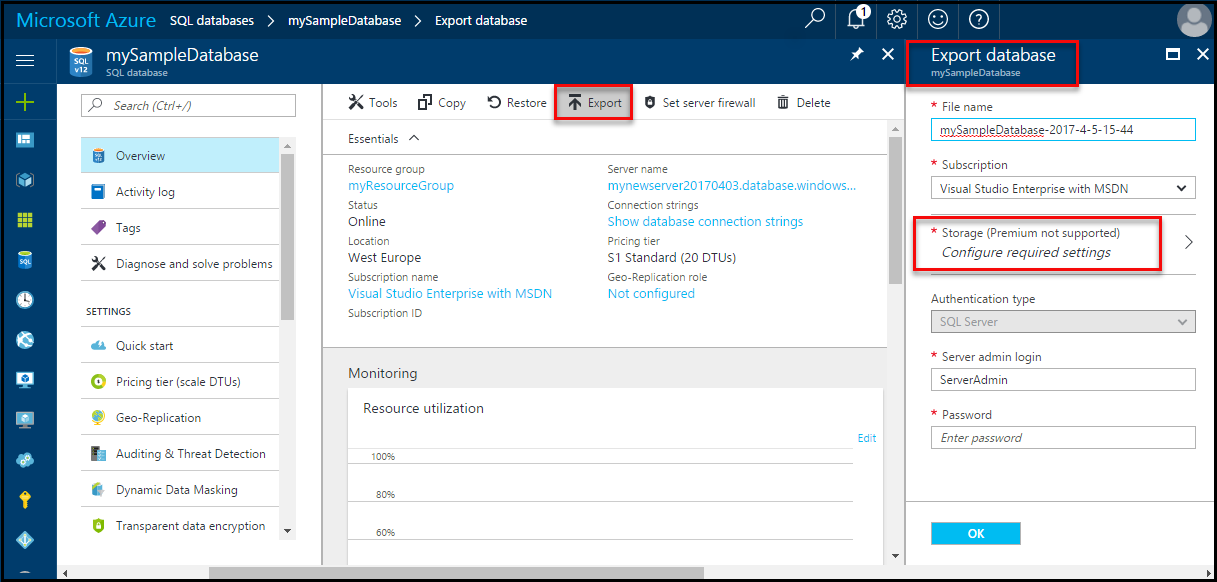

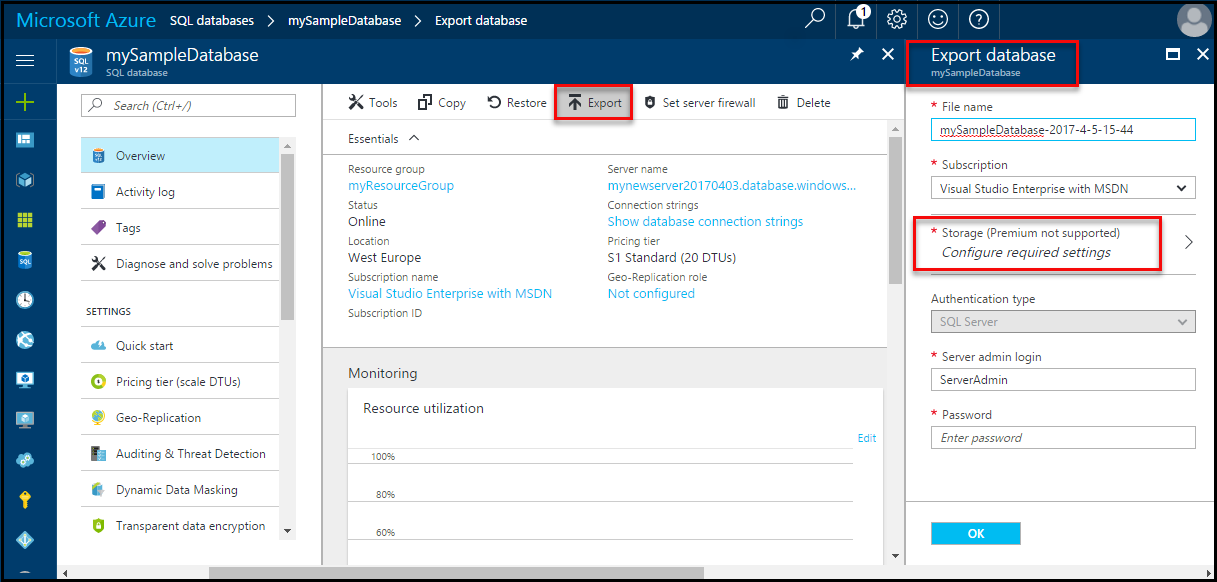

Exporting MSSQL Databases (schema and data) from Azure

Because Microsoft have disabled the ability to perform backups / exports of MSSQL databases from Azure directly from the SQL Management Studio (why?!) we now have to perform this from the Azure Portal.

A new format, known as a 'bacpac' file, allows you to store both the database schema and data within a single (compressed) file.

Open up the Resource Group in the Azure Portal, select the relevant database >> Overview >> and then select the 'Export' button:

Unfortunately these can't be downloaded directly and will need to be placed into a storage account.

If you wish to import this into another Azure Tenant or an on-premise SQL server you'll need to download the Azure Storage Explorer to download the backup.

Friday, 14 July 2017

A crash course on Bash / Shell scripting concepts

if statement

if [[ $1 == "123" ]]

then echo "Argument 1 equals to 123"

else

echo "Argument 1 does not equal to 123"

fi

inverted if statement

if ! [[ $1 == "123" ]]

then echo "Argument 1 does not equal to 123"

fi

regular expression (checking for number)

regex='^[0-9]+$'

if [[ $num =~ $regex ]]

then echo "This is a valid number!"

fi
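
The number check can be wrapped in a small reusable function. This is only a sketch: the function name 'is_number' and the test values are made up for illustration. Note that the pattern variable has to be expanded ($regex) and left unquoted on the right-hand side of '=~':

```shell
#!/bin/bash
# is_number: succeeds only when the argument consists solely of digits.
is_number() {
    local regex='^[0-9]+$'
    # Quoting "$regex" here would make bash match it as a literal string.
    [[ $1 =~ $regex ]]
}

for value in 42 4x2 ""; do
    if is_number "$value"; then
        echo "'$value' is a valid number"
    else
        echo "'$value' is not a valid number"
    fi
done
```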

while loop

NUMBER=1

while [[ $NUMBER -le "20" ]]

do echo "The number ($NUMBER) is less than or equal to 20"

NUMBER=$((NUMBER + 1))

done

awk (separate by char)

LINE=this,is,a,test

echo "$LINE" | awk -F ',' '{print "The first element is " $1}'

functions

function MyFunction {

echo "$1"

}

MyFunction testing123 # a function must be defined before it is called

read a file

while read -r LINE

do echo "Line: $LINE"

done < /tmp/inputfile.txt

case statement

case $1 in

[1-2]) echo "Number is between 1 and 2"

;;

[3-4]) echo "Number is between 3 and 4"

;;

5) echo "Number is 5"

;;

6) echo "Number is 6"

;;

*) echo "Something else..."

;;

esac

for loop

for arg in "$@"

do echo "$arg"

done

arrays

myarray=(20 "test 123" 50)

myarray+=("new element")

for element in "${myarray[@]}"

do echo "$element"

done
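
To see why the quoting around the array expansion matters, here is a short sketch (assuming the default IFS) that counts the loop iterations with and without quotes; the counter variable names are made up for illustration:

```shell
#!/bin/bash
myarray=(20 "test 123" 50)
myarray+=("new element")

# Quoted expansion: one word per element, embedded spaces are preserved.
quoted=0
for element in "${myarray[@]}"; do
    quoted=$((quoted + 1))
done

# Unquoted expansion: each element is split again on whitespace.
unquoted=0
for element in ${myarray[@]}; do
    unquoted=$((unquoted + 1))
done

echo "Elements in array: ${#myarray[@]}"
echo "Iterations (quoted): $quoted"
echo "Iterations (unquoted): $unquoted"
```

The quoted form loops once per element, whereas the unquoted form also splits "test 123" and "new element" into separate words.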

getting user input

echo "Please enter your age:"

read userinput

echo You have entered $userinput!

executing commands within bash

DATE1=`date +%Y%m%d`

echo Date1 is: $DATE1

DATE2=$(date +%Y%m%d) # preferred way

echo Date2 is: $DATE2
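
One reason the $( ) form is usually preferred is that it nests cleanly, whereas nested backticks have to be escaped. A small sketch (the log file path is hypothetical and is only used as a string, it does not need to exist):

```shell
#!/bin/bash
LOGFILE=/var/log/httpd/access.log   # hypothetical path, used purely as text

# $( ) nests without any escaping...
PARENT1=$(basename "$(dirname "$LOGFILE")")

# ...whereas the inner backticks must be escaped with backslashes.
PARENT2=`basename \`dirname $LOGFILE\``

echo "Parent directory (\$( ) form): $PARENT1"
echo "Parent directory (backtick form): $PARENT2"
```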

To be continued...

Setting up Octopus Tentacle on Windows Server 2012/2016 Core

For this tutorial I will be setting up Octopus Tentacle in a container running Server 2016 Core.

Let's firstly create our container with:

docker run -it --cpus 2 --memory 4G --network=<network-id> --name windowscore -h <your-hostname> microsoft/windowsservercore cmd.exe

Ensure that your computer name is correct and setup in DNS - so both Octopus and the server running the Tentacle can communicate with each other.

and for the purposes of this tutorial we will use a static IP and also join it to our domain with Powershell:

Get-NetIPInterface | FL # grab the relevant interface ID from here

Ensure DHCP is disabled on the NIC:

Set-NetIPInterface -InterfaceIndex 22 -DHCP Disabled

and assign a static IP address with:

New-NetIPAddress -InterfaceIndex 22 -IPAddress 10.11.12.13 -PrefixLength 24

Remove-NetRoute -InterfaceIndex 22 -DestinationPrefix 0.0.0.0/0

New-NetRoute -DestinationPrefix 0.0.0.0/0 -InterfaceIndex 22 -NextHop 10.11.12.1

and then DNS servers:

Set-DnsClientServerAddress -InterfaceIndex 22 -ServerAddresses ("10.1.2.3","10.3.2.1")

and join to the domain with:

$domain = "myDomain"

$password = "myPassword!" | ConvertTo-SecureString -asPlainText -Force

$username = "$domain\myUserAccount"

$credential = New-Object System.Management.Automation.PSCredential($username,$password)

Add-Computer -DomainName $domain -Credential $credential

and finally shut down the container so it can be started again with the new settings:

shutdown /s /t 0

docker start <your-container>

docker attach <your-container>

Let's firstly get into powershell and download the latest version of Octopus tentacle:

powershell.exe

mkdir C:\temp

cd C:\temp

Start-BitsTransfer -Source https://download.octopusdeploy.com/octopus/Octopus.Tentacle.3.15.1-x64.msi -Destination C:\temp

and then perform a quiet installation of it:

msiexec /i Octopus.Tentacle.3.15.1-x64.msi /quiet

We now need to configure the tentacle - so let's firstly create a new instance:

exit # exit out of powershell as service installation fails otherwise

cd "C:\Program Files\Octopus Deploy\Tentacle"

tentacle.exe create-instance --instance "Tentacle" --config "C:\Octopus\Tentacle.config" --console

and generate a new certificate for it:

tentacle.exe new-certificate --instance "Tentacle" --if-blank --console

tentacle.exe configure --instance "Tentacle" --reset-trust --console

and create the listener:

Tentacle.exe configure --instance "Tentacle" --home "C:\Octopus" --app "C:\Octopus\Applications" --port "10933" --console

add your Octopus Deploy server thumbprint in:

Tentacle.exe configure --instance "Tentacle" --trust "YOUR_OCTOPUS_THUMBPRINT" --console

Sort out the firewall exception:

"netsh" advfirewall firewall add rule "name=Octopus Deploy Tentacle" dir=in action=allow protocol=TCP localport=10933

Finally register the tentacle with the Octopus Deploy server:

Tentacle.exe register-with --instance "Tentacle" --server "http://YOUR_OCTOPUS" --apiKey="API-YOUR_API_KEY" --role "web-server" --environment "staging" --comms-style TentaclePassive --console

Note: You will likely need to generate an API key - this can be generated from the Octopus web interface, clicking on your username >> Profile and then hitting the API tab.

and install / start the service with:

Tentacle.exe service --instance "Tentacle" --install --start --console

We can now verify this with:

sc query "OctopusDeploy Tentacle"

Thursday, 13 July 2017

Script to remove bad characters from a set of files

The need for this script was prompted by a series of files being uploaded to Sharepoint which had special characters within their filenames such as an asterisk or tilde.

Although there are many ways to achieve this I opted for a simple approach using cp and sed.

We can use the sed substitute function to replace any bad characters - we have the following directory we wish to 'cleanse':

ls -la /tmp/test

drwxrwxr-x. 2 limited limited 120 Jul 13 13:45 .

drwxrwxrwt. 40 root root 1280 Jul 13 13:43 ..

-rw-rw-r--. 1 limited limited 0 Jul 13 13:45 'fran^k.txt'

-rw-rw-r--. 1 limited limited 0 Jul 13 13:44 @note.txt

-rw-rw-r--. 1 limited limited 0 Jul 13 13:44 'rubbi'\''sh.txt'

-rw-rw-r--. 1 limited limited 0 Jul 13 13:43 'test`.txt'

We can run a quick test to see what the results would look like just piping the result out to stdout:

#!/bin/bash

cd /tmp/test

FileList=*

for file in $FileList;

do (echo $file | sed s/[\'\`^@]/_/g );

done;

Note: The 'g' option instructs sed to substitute all matches on each line.

Or an even better approach (adapted from here):

#!/bin/bash

cd /tmp/test

FileList=*

for file in $FileList;

do (echo $file | sed s/[^a-zA-Z0-9._-]/_/g );

done;

The caret (^) usually means match at the beginning of the line in a normal regex - however when it is the first character inside the bracket ([ ]) operators it inverts the match - so anything that does not match the specified characters is replaced with the underscore character.
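
The same inverted bracket expression can also be used with bash's own pattern substitution, which avoids spawning sed for every filename. This is an alternative sketch rather than the approach used in this post; the function name 'sanitise_name' is made up for illustration:

```shell
#!/bin/bash
# sanitise_name: replace every character other than a-z, A-Z, 0-9, '.', '_'
# and '-' with an underscore using ${variable//pattern/replacement}.
sanitise_name() {
    local name=$1
    printf '%s\n' "${name//[^a-zA-Z0-9._-]/_}"
}

for name in 'fran^k.txt' '@note.txt' "rubbi'sh.txt" 'test`.txt'; do
    echo "$name -> $(sanitise_name "$name")"
done
```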

If we are happy with the results we can get cp to copy the files into our 'sanitised directory':

#!/bin/bash

cd /tmp/test

FileList=*

OutputDirectory=/tmp/output/

for file in $FileList;

do cp "$file" "$OutputDirectory$(printf '%s' "$file" | sed 's/[^a-zA-Z0-9._-]/_/g')";

done;

There are some limitations to this however - for example the above script will not work with sub directories properly - so in order to cater for this we need to make a few changes:

#!/bin/bash

if [ $# -lt 2 ]

then

echo "Usage: stripbadchars.sh <source-directory> <output-directory>"

exit

fi

FileList=`find $1 | tail -n +2` # we need to exclude the first line (as it's a directory path)

OutputDirectory=$2

for file in $FileList

do BASENAME=$(basename $file)

BASEPATH=$(dirname $file)

SANITISEDFNAME=`echo $BASENAME | sed s/[^a-zA-Z0-9._-]/_/g`

# cp won't create the directory structure for us - so we need to do it ourself

mkdir -p $OutputDirectory/$BASEPATH

echo "Writing file: $OutputDirectory$BASEPATH/$SANITISEDFNAME"

cp -R $file $OutputDirectory$BASEPATH/$SANITISEDFNAME

done

Note: Simple bash variables will not list all files recursively - so instead we can use the 'find' command to do this for us.

vi stripbadchars.sh

chmod 700 stripbadchars.sh

and execute with:

./stripbadchars.sh /tmp/test /tmp/output

Configuring NICs on Windows Server 2016 from the command line / Powershell

It seems that in Server 2016 they have removed some of the functionality in some older utilities such as netsh, netdom etc.

So in order to configure IP addresses from the command line it looks like we should put our trust solely in Powershell (*cringes*.)

In order to set a static IP address there are a few commands we need to run - firstly disabling the DHCP on the relevant NIC:

Get-NetIPInterface | FL # grab the relevant interface ID from here

Set-NetIPInterface -InterfaceIndex 22 -DHCP Disabled

*Note: We can also use the 'InterfaceAlias' switch to provide the NIC's name - however personally I prefer using the Index.

In my experience disabling DHCP on the interface does not always wipe any existing configuration - so we should remove any existing IP addresses with:

Remove-NetIPAddress -InterfaceIndex 22 -IPAddress 10.11.12.13 -PrefixLength 24

and any default route associated with the interface:

Remove-NetRoute -InterfaceIndex 22 -DestinationPrefix 0.0.0.0/0

And then assign the IP address, subnet mask and default route (if any) with:

New-NetIPAddress -InterfaceIndex 22 -IPAddress 12.13.14.15 -PrefixLength 24

New-NetRoute -DestinationPrefix 0.0.0.0/0 -InterfaceIndex 22 -NextHop 12.13.14.1

and finally any DNS servers:

Set-DnsClientServerAddress -InterfaceIndex 22 -ServerAddresses ("8.8.8.8","8.8.4.4")

Wednesday, 12 July 2017

Windows Containers / Docker - Creating a 'bridged' or 'transparent' network

By default Windows Containers (or Docker on Server 2016) uses WinNAT to provide NAT functionality to containers - however in some cases you will likely want to run a container in bridged mode - i.e. provide direct network access to the container.

We can do this fairly easily with:

docker network create -d transparent -o com.docker.network.windowsshim.interface="Ethernet0" TransparentNet

Confirm with:

docker network ls

We can also check the details of the network with the 'inspect' command - for example:

docker network inspect <network-id>

Static IPs

If you wish to use a static IP on the container you need to ensure the '--subnet' switch is included when creating the network - for example:

docker network create -d transparent --subnet=10.11.12.0/24 --gateway=10.11.12.1 -o com.docker.network.windowsshim.interface="Ethernet0" TransparentNet

We can then launch new containers using the '--network' switch - for example:

docker run --network=<NETWORK-ID>

Unfortunately the Docker team have not yet provided us with the ability to change the network mode on existing containers - so you will have to re-create it.

Snippet: Copying only modified files within X days

The following snippet allows you to copy only files modified within the last 7 days (from the current date) to a predefined destination.

find /var/log -type f -mtime -7 -exec cp {} /home/user/modified_logs \;

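The '-mtime' switch specifies the time (in days) of how far you wish to span back.

You can also check for files that have been accessed with the '-atime' switch and similarly for files whose status (inode metadata) last changed with the '-ctime' switch - note that this is the change time rather than the creation time.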

The '-exec' switch allows us to execute a custom command (in this case cp) and transplant the output of the find command into the custom cp command (i.e. {})

Finally we need to end the exec portion with '\;'
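
If you would like to see the behaviour before pointing it at real data, the following sketch runs the same kind of find command against a throwaway directory. It assumes GNU touch (for the '-d' back-dating option) and uses '-type f' to restrict the match to regular files; the file names are made up for illustration:

```shell
#!/bin/bash
# Build a temporary directory tree so nothing real is touched.
WORKDIR=$(mktemp -d)
mkdir "$WORKDIR/src" "$WORKDIR/dest"

touch "$WORKDIR/src/recent.log"                   # modified just now
touch -d '10 days ago' "$WORKDIR/src/stale.log"   # GNU touch: back-date the mtime

# Copy only regular files modified less than 7 days ago.
find "$WORKDIR/src" -type f -mtime -7 -exec cp {} "$WORKDIR/dest" \;

COPIED=$(ls "$WORKDIR/dest")
echo "Copied: $COPIED"

# Clean up the temporary tree.
rm -rf "$WORKDIR"
```

Only the recently modified file should end up in the destination directory.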

Sunday, 9 July 2017

CentOS / RHEL: Enabling automatic updates of critical security patches

While I certainly wouldn't recommend enabling automatic updates (even general security updates) on a production server I would however (in most cases) recommend enabling automatic updates for critical security patches.

We can do this with the yum-cron tool - which (as suggested) creates a cronjob to perform the updates:

sudo yum -y install yum-cron

We can then configure yum-cron - ensuring it only applies critical security updates:

vi /etc/yum/yum-cron.conf

and setup a mail host and destination - while ensuring that the update_cmd is set accordingly:

update_cmd = minimal-security-severity:Critical

start the service:

sudo systemctl start yum-cron

and ensure it starts at boot:

sudo systemctl enable yum-cron

By default yum-cron runs on a daily basis - however this can easily be changed or the command integrated into your own cron job:

cat /etc/cron.daily/0yum-daily.cron

#!/bin/bash

# Only run if this flag is set. The flag is created by the yum-cron init

# script when the service is started -- this allows one to use chkconfig and

# the standard "service stop|start" commands to enable or disable yum-cron.

if [[ ! -f /var/lock/subsys/yum-cron ]]; then

exit 0

fi

# Action!

exec /usr/sbin/yum-cron

Friday, 7 July 2017

Linux: Benchmarking disk I/O and determining block size

A quick command that can be used to benchmark the disk write speed:

time sh -c "dd if=/dev/zero of=ddfile bs=8k count=250000 && sync"; rm ddfile

and with a slightly larger block size:

time sh -c "dd if=/dev/zero of=ddfile bs=16M count=10 && sync"; rm ddfile

Note: Finding the appropriate block size when working with dd will be dependent on the hardware - the block size determines how much data is kept in memory during the copy - so you can imagine that if the block size is 2G in size you would need to ensure that you have at least 2GB of free RAM. I like to start with a BS of 64k and compare it with something like 16M.
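
When comparing block sizes it helps to write the same total amount of data on each run (the two commands above write roughly 2GB and 160MB respectively). The following is a small sketch that works out the 'count' value for a given block size; the helper name 'dd_count' and the 64MiB total are arbitrary choices for illustration, and the actual dd line is left commented out so nothing is written until you are ready:

```shell
#!/bin/bash
# dd_count: number of blocks needed to write TOTAL bytes at block size BS (both in bytes).
dd_count() {
    local total=$1 bs=$2
    echo $(( total / bs ))
}

TOTAL=$((64 * 1024 * 1024))   # 64MiB per run - an arbitrary figure

for bs in $((64 * 1024)) $((16 * 1024 * 1024)); do
    count=$(dd_count "$TOTAL" "$bs")
    echo "bs=$bs bytes -> count=$count"
    # Uncomment to run the benchmark itself:
    # time sh -c "dd if=/dev/zero of=ddfile bs=$bs count=$count && sync"; rm ddfile
done
```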

We can also test read speed using hdparm:

hdparm -t /dev/sda1 # performs buffered test (more accurate)

hdparm -T /dev/sda1 # performs cached test

RAM, CPU and I/O Throttling with Windows Server Containers / Docker

CPU

Docker provides several options for limiting CPU with containers - one of the more common switches is '--cpus' - which allows you to limit the number of CPUs a container can use - for example if I wanted to ensure a container only used one and a half processors I could issue:

docker run -it --name windowscorecapped --cpus 1.5 microsoft/windowsservercore cmd.exe

There is also a switch called '--cpu-shares' which allows you to delegate which containers would get priority (weighted) access to CPU cycles during (and only during) contention - the default value is 1024 - which can be set to either lower or higher:

docker run -it --name windowscorecapped --cpus 1.5 --cpu-shares 1500 microsoft/windowsservercore cmd.exe

RAM

Docker on Windows uses a light-weight Hyper-V VM to create the containers and as a result has the default 2GB memory limit - we can increase this using the '-m' ('--memory') switch e.g.:

docker run -it --name windowscorecapped --memory 4G microsoft/windowsservercore cmd.exe

Disk I/O

Docker provides a few options to control I/O throughput - one of the more useful options is the '--blkio-weight' switch which can be found under the 'docker run' command.

--blkio-weight takes an integer between 10 and 1000 - although by default it is set to 0 - which disables it.

Unfortunately at this time it appears that the Windows implementation of Docker does not support this feature:

docker: Error response from daemon: invalid option: Windows does not support BlkioWeight.

So instead for Windows we have '--io-maxiops' and '--io-maxbandwidth'.

Neither is as good as '--blkio-weight' - '--io-maxiops' is not ideal because an IOPS limit says nothing about the size of each I/O - for example a read or write block size of 8KB might give a very different result than that of a 64KB block.
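The relationship is simply throughput = IOPS x block size, so the same IOPS cap lets very different amounts of data through depending on the block size. A quick sketch of the arithmetic (the function names are hypothetical):

```shell
# iops_to_kb_per_sec IOPS BLOCK_KB -> throughput in KB/s at that block size
iops_to_kb_per_sec() {
    echo $(( $1 * $2 ))
}

# kb_per_sec_to_iops KB_PER_SEC BLOCK_KB -> I/O operations per second that
# fit inside a bandwidth cap at that block size (rounded down)
kb_per_sec_to_iops() {
    echo $(( $1 / $2 ))
}

# An IOPS cap of 100 with 8KB and with 64KB blocks:
iops_to_kb_per_sec 100 8     # 800 KB/s
iops_to_kb_per_sec 100 64    # 6400 KB/s

# A 5MB/s (5120 KB/s) bandwidth cap with the same block sizes:
kb_per_sec_to_iops 5120 8    # 640 IOPS
kb_per_sec_to_iops 5120 64   # 80 IOPS
```

So whichever of the two limits you pick, it is worth working out what it means for the block size your application actually uses.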

There are numerous tools that can help simulate disk I/O for different situations - for example database reads / writes, which will typically be small but numerous. One of these tools, diskspd, can be obtained from Microsoft.

Let's firstly create a normal (unthrottled) container and perform some benchmarks:

docker run -it --name windowscore microsoft/windowsservercore cmd.exe

If you are on Windows Server Core you can download diskspd using PowerShell e.g.:

Invoke-WebRequest -Uri "http://url.com/disk.zip" -OutFile "C:\Disk.zip"

and since Microsoft didn't bother to include a command line unzip utility (not even in Windows 10!) we have to use PowerShell to unzip it:

powershell.exe -nologo -noprofile -command "& { Add-Type -A 'System.IO.Compression.FileSystem'; [IO.Compression.ZipFile]::ExtractToDirectory('C:\Disk.zip', 'disk'); }"

We can then run it as follows (note: this config is aimed at a SQL server - you should change the block size etc. depending on the use of the server / targeted application):

diskspd -b8K -d30 -o4 -t8 -h -r -w25 -L -Z1G -c5G C:\iotest.dat

Read IO

thread | bytes | I/Os | MB/s | I/O per s | AvgLat | LatStdDev | file

-----------------------------------------------------------------------------------------------------

0 | 55533568 | 6779 | 1.77 | 225.96 | 8.442 | 35.928 | C:\iotest.dat (5120MB)

1 | 64618496 | 7888 | 2.05 | 262.93 | 7.412 | 34.584 | C:\iotest.dat (5120MB)

2 | 53665792 | 6551 | 1.71 | 218.36 | 7.792 | 31.946 | C:\iotest.dat (5120MB)

3 | 63733760 | 7780 | 2.03 | 259.33 | 6.919 | 30.835 | C:\iotest.dat (5120MB)

4 | 32374784 | 3952 | 1.03 | 131.73 | 14.018 | 44.543 | C:\iotest.dat (5120MB)

5 | 62357504 | 7612 | 1.98 | 253.73 | 7.571 | 33.976 | C:\iotest.dat (5120MB)

6 | 53354496 | 6513 | 1.70 | 217.10 | 8.352 | 35.517 | C:\iotest.dat (5120MB)

7 | 65290240 | 7970 | 2.08 | 265.66 | 7.016 | 33.599 | C:\iotest.dat (5120MB)

-----------------------------------------------------------------------------------------------------

total: 450928640 | 55045 | 14.33 | 1834.80 | 8.064 | 34.699

Write IO

thread | bytes | I/Os | MB/s | I/O per s | AvgLat | LatStdDev | file

-----------------------------------------------------------------------------------------------------

0 | 18046976 | 2203 | 0.57 | 73.43 | 28.272 | 56.258 | C:\iotest.dat (5120MB)

1 | 21463040 | 2620 | 0.68 | 87.33 | 23.235 | 49.989 | C:\iotest.dat (5120MB)

2 | 17686528 | 2159 | 0.56 | 71.97 | 31.750 | 64.613 | C:\iotest.dat (5120MB)

3 | 20946944 | 2557 | 0.67 | 85.23 | 25.756 | 56.673 | C:\iotest.dat (5120MB)

4 | 11157504 | 1362 | 0.35 | 45.40 | 47.233 | 72.614 | C:\iotest.dat (5120MB)

5 | 21438464 | 2617 | 0.68 | 87.23 | 23.498 | 50.224 | C:\iotest.dat (5120MB)

6 | 17784832 | 2171 | 0.57 | 72.37 | 30.173 | 59.930 | C:\iotest.dat (5120MB)

7 | 21282816 | 2598 | 0.68 | 86.60 | 24.409 | 55.087 | C:\iotest.dat (5120MB)

-----------------------------------------------------------------------------------------------------

and then create a new container - this time capping the max i/o:

docker run -it --name windowscorecapped --io-maxiops 100 microsoft/windowsservercore cmd.exe

and again download and unzip diskspd and run the benchmark again with:

diskspd -b8K -d30 -o4 -t8 -h -r -w25 -L -Z1G -c5G C:\iotest.dat

Read IO

thread | bytes | I/Os | MB/s | I/O per s | AvgLat | LatStdDev | file

-----------------------------------------------------------------------------------------------------

0 | 5390336 | 658 | 0.17 | 21.93 | 13.552 | 114.205 | C:\iotest.dat (5120MB)

1 | 5849088 | 714 | 0.19 | 23.80 | 12.530 | 109.649 | C:\iotest.dat (5120MB)

2 | 5619712 | 686 | 0.18 | 22.87 | 10.098 | 98.537 | C:\iotest.dat (5120MB)

3 | 4931584 | 602 | 0.16 | 20.07 | 22.938 | 147.832 | C:\iotest.dat (5120MB)

4 | 5758976 | 703 | 0.18 | 23.43 | 15.490 | 122.039 | C:\iotest.dat (5120MB)

5 | 5611520 | 685 | 0.18 | 22.83 | 10.078 | 98.006 | C:\iotest.dat (5120MB)

6 | 5283840 | 645 | 0.17 | 21.50 | 13.776 | 114.819 | C:\iotest.dat (5120MB)

7 | 5169152 | 631 | 0.16 | 21.03 | 18.774 | 133.842 | C:\iotest.dat (5120MB)

-----------------------------------------------------------------------------------------------------

total: 43614208 | 5324 | 1.39 | 177.46 | 14.486 | 117.836

Write IO

thread | bytes | I/Os | MB/s | I/O per s | AvgLat | LatStdDev | file

-----------------------------------------------------------------------------------------------------

0 | 1867776 | 228 | 0.06 | 7.60 | 487.168 | 493.073 | C:\iotest.dat (5120MB)

1 | 1941504 | 237 | 0.06 | 7.90 | 464.425 | 492.295 | C:\iotest.dat (5120MB)

2 | 1851392 | 226 | 0.06 | 7.53 | 500.177 | 493.215 | C:\iotest.dat (5120MB)

3 | 1769472 | 216 | 0.06 | 7.20 | 487.016 | 492.672 | C:\iotest.dat (5120MB)

4 | 1843200 | 225 | 0.06 | 7.50 | 480.479 | 492.992 | C:\iotest.dat (5120MB)

5 | 1826816 | 223 | 0.06 | 7.43 | 507.046 | 492.908 | C:\iotest.dat (5120MB)

6 | 1761280 | 215 | 0.06 | 7.17 | 516.741 | 492.909 | C:\iotest.dat (5120MB)

7 | 1826816 | 223 | 0.06 | 7.43 | 484.961 | 492.556 | C:\iotest.dat (5120MB)

-----------------------------------------------------------------------------------------------------

As seen from the results above there is a significant difference in iops and latency between the two containers.
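The write tables above do not include a totals row, so to compare the two containers it helps to add up the per-thread figures. A sketch using awk, assuming the diskspd output has been saved as text ('sum_iops' is a hypothetical helper name):

```shell
# sum_iops: read diskspd per-thread rows on stdin and print the sum of the
# "I/O per s" column (the 5th '|'-separated field). Header, separator and
# "total:" lines are skipped because their first field is not a thread number.
sum_iops() {
    awk -F'|' '$1 ~ /^[ \t]*[0-9]+[ \t]*$/ { sum += $5 }
               END { printf "%.2f\n", sum }'
}

# First two write rows from the unthrottled container:
sum_iops <<'EOF'
0 | 18046976 | 2203 | 0.57 | 73.43 | 28.272 | 56.258 | C:\iotest.dat (5120MB)
1 | 21463040 | 2620 | 0.68 | 87.33 | 23.235 | 49.989 | C:\iotest.dat (5120MB)
EOF
```

Fed all eight write rows from each table, this gives roughly 609.56 write I/Os per second for the unthrottled container against 59.76 for the capped one.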

The other option is to throttle based on maximum bandwidth per second - for example 5MB per second:

docker run -it --name windowscorebandwidth --io-maxbandwidth=5m microsoft/windowsservercore cmd.exe

Installing / configuring Windows Server Containers (Docker) on Server 2016 Core

Docker and Microsoft formed an agreement back in 2014 to provide Docker technology to be integrated into Windows Server 2016.

Forenote: If you are running the host machine under ESXi you should ensure that you create a port group with promiscuous mode enabled in order to get Docker networking running in transparent mode!

Before enabling Docker we should ensure that the host system is up to date by running:

cmd.exe

sconfig

and select 'option 6'

or if you are on a non-core version:

wuauclt.exe /updatenow

restart the system and install the Containers feature with:

powershell.exe (Run as Administrator)

Enable-WindowsOptionalFeature -Online -FeatureName Containers

then the Docker package provider module with:

Install-Module -Name DockerMsftProvider -Repository PSGallery -Force

and Docker itself with:

Install-Package -Name docker -ProviderName DockerMsftProvider

and restart the system with:

Restart-Computer -Force

Open up PowerShell again once the server has rebooted and we will now download a pre-made image from the Microsoft Docker repository - we can then add customisation to this and roll our own specialised version:

Install-PackageProvider -Name ContainerImage -Force

and proceed by pulling a base image - here 'Windows Server Core' ('NanoServer', a further stripped down version of Server 2016, is also available):

docker pull microsoft/windowsservercore

or

Install-ContainerImage -Name WindowsServerCore

I prefer to use the native 'docker' commands since they can be applied to other operating systems as well.

And review our docker images with:

docker images

We can then run the container with:

docker run microsoft/windowsservercore

You'll notice the container will bomb out pretty much straight away - this is because it simply loads cmd.exe and then quits. We need to specify the '-it' switch to run it in interactive mode - for example:

docker run -it --name windowscore microsoft/windowsservercore cmd.exe

Something better would be:

docker run -it --cpus 2 --memory 4G --network=<network-id> --name <container-name> --expose=<port-to-expose> -h <container-hostname> microsoft/windowsservercore cmd.exe

and open a separate PowerShell window to verify it's running with:

docker ps

If all went well you should be presented with the command prompt (issue 'hostname' to verify the computer name) - now type exit and you will be dropped back to PowerShell.

The docker container status is now classed as 'stopped' - in order to see all containers (including running and not running) issue:

docker ps -a

We can then either start the container again with:

docker start windowscore

or stop and delete it with:

docker stop windowscore

docker rm windowscore

or attach to it with:

docker attach windowscore
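If you find yourself repeating the stop / remove / run cycle while experimenting, it can be wrapped in a small helper. This is only a sketch for a POSIX shell (for example a remote Docker client or Git Bash) - 'recreate_container' and the 'DOCKER' override variable are my own inventions, the latter so that the commands can be previewed with 'DOCKER=echo'.

```shell
# DOCKER can be overridden (e.g. DOCKER=echo) to preview the commands
# instead of running them. Hypothetical helper, not part of Docker.
DOCKER=${DOCKER:-docker}

# recreate_container NAME IMAGE [COMMAND...]
# Stops and removes any existing container called NAME, then starts a
# fresh interactive one from IMAGE.
recreate_container() {
    name=$1
    image=$2
    shift 2
    # Errors are ignored here because the container may not exist yet.
    $DOCKER stop "$name" >/dev/null 2>&1 || true
    $DOCKER rm "$name" >/dev/null 2>&1 || true
    $DOCKER run -it --name "$name" "$image" "$@"
}

# Example:
# recreate_container windowscore microsoft/windowsservercore cmd.exe
```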

You can also copy files from the Docker host to a container - for example:

docker cp C:\program.exe <container-name>:C:\Programs

Thursday, 6 July 2017

Creating and restoring a block level backup of a disk with dd and par2

Let's firstly take an image of the disk / partition we wish to back up by piping it into a gzip archive:

dd if=/dev/sda1 bs=64K | gzip -9 -c > /tmp/backup.img.gz; sync

For parity we need to ensure par2 is installed with:

sudo yum -y install par2cmdline

cd /tmp

par2 create backup.img.gz

Which by default will provide 5% redundancy and a recovery block count of 100.

We could alternatively use a custom level with:

par2 create -s500000 -r10 backup.img.gz

Where the recovery is set at 10% with a block size of 500KB.
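To get a feel for what those switches mean in practice: par2 splits the file into blocks of the given size and creates roughly the requested percentage of that many recovery blocks. A rough sketch of the arithmetic (hypothetical function names - par2's exact rounding may differ slightly):

```shell
# source_blocks FILE_BYTES BLOCK_BYTES -> blocks needed to cover the file
# (rounded up, since a partial final block still occupies a whole block)
source_blocks() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# recovery_blocks SOURCE_BLOCKS PERCENT -> approximate recovery block count
recovery_blocks() {
    echo $(( $1 * $2 / 100 ))
}

# A 1GB (1,000,000,000 byte) image with -s500000 -r10:
blocks=$(source_blocks 1000000000 500000)
echo "$blocks"                  # 2000 source blocks
recovery_blocks "$blocks" 10    # 200 recovery blocks (about 100MB)
```

In other words up to around 200 damaged 500KB blocks could be rebuilt, at the cost of about 100MB of extra parity data.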

We can then restore the partition with:

gzip -dc /tmp/backup.img.gz | dd of=/dev/sda1; sync

The 'd' switch instructs gzip to decompress the archive, while the 'c' switch instructs gzip to write the output to stdout so we can pipe it into dd for processing.
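Note that the restore above never actually consults the parity files. A safer sequence is to verify the archive first, attempt a repair if verification fails, and only then write it back. A minimal sketch, assuming the .par2 files sit next to the archive under the default name par2 gives them ('verified_restore' is a hypothetical helper):

```shell
# verified_restore IMAGE_GZ DEVICE
# Verifies IMAGE_GZ against IMAGE_GZ.par2, repairs it if the check fails,
# and only then writes the decompressed image to DEVICE.
verified_restore() {
    image=$1
    device=$2
    if ! par2 verify "$image.par2"; then
        echo "verification failed, attempting repair" >&2
        par2 repair "$image.par2" || return 1
    fi
    # bs=65536 is 64K, matching the block size used to take the backup.
    gzip -dc "$image" | dd of="$device" bs=65536 && sync
}

# Example (destructive: overwrites the partition):
# verified_restore /tmp/backup.img.gz /dev/sda1
```

If the repair itself fails the function returns before anything is written to the target device.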

Tuesday, 4 July 2017

Snort: Installing and configuring pulledpork

Pulledpork is a utility written in Perl that automates snort rule updates.

Let's firstly grab a copy of the latest version of pulledpork from the official repo on GitHub:

cd /tmp

git clone https://github.com/shirkdog/pulledpork.git

cd pulledpork

cp pulledpork.pl /usr/local/bin/

chmod +x /usr/local/bin/pulledpork.pl

We'll also need to ensure Perl and a few of its modules are installed:

sudo yum -y install perl cpan

sudo cpanm LWP ExtUtils::CBuilder Path::Class Crypt::SSLeay Sys::Syslog Archive::Tar

We can now copy all of the pulledpork config files to our snort directory with:

cp etc/* /etc/snort/

and then edit our pulledpork.conf file as follows:

rule_url=https://www.snort.org/reg-rules/|snortrules-snapshot.tar.gz|<your-oink-code-here>

rule_path=/etc/snort/rules/snort.rules

local_rules=/etc/snort/rules/local.rules

sid_msg=/etc/snort/sid-msg.map

sorule_path=/usr/local/lib/snort_dynamicrules/

snort_path=/usr/sbin/snort

config_path=/etc/snort/snort.conf

distro=RHEL-6-0
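Before the test run it is worth checking that none of these settings were missed. A small sketch ('missing_keys' is a hypothetical helper; the key list is simply the settings edited above):

```shell
# missing_keys CONF_FILE KEY...
# Prints each KEY that has no uncommented "KEY=value" line in CONF_FILE.
missing_keys() {
    conf=$1
    shift
    for key in "$@"; do
        grep -q "^${key}=" "$conf" || echo "$key"
    done
}

# Example:
# missing_keys /etc/snort/pulledpork.conf rule_url rule_path local_rules \
#     sid_msg sorule_path snort_path config_path distro
```

No output means every setting is present and uncommented.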

and then perform a test run with:

/usr/local/bin/pulledpork.pl -c /etc/snort/pulledpork.conf -T -H

Finally we can create a cron job to perform this every week (on Sunday):

0 0 * * 0 /usr/local/bin/pulledpork.pl -c /etc/snort/pulledpork.conf -T -H && /usr/sbin/service snort restart

I also found that there were many overly sensitive alerts such as 'stream5: TCP Small Segment Threshold Exceeded' - we can use the 'disablesid.conf' file to ensure pulledpork does not enable these rules:

sudo vi /etc/snort/disablesid.conf

and add:

119:19 # http_inspect: LONG HEADER

123:8 # frag3: Fragmentation overlap

128:4 # ssh: Protocol mismatch

129:4 # stream5: TCP Timestamp is outside of PAWS window

129:5 # stream5: Bad segment, overlap adjusted size less than/equal 0

129:7 # stream5: Limit on number of overlapping TCP packets reached

129:12 # stream5: TCP Small Segment Threshold Exceeded

129:15 # stream5: Reset outside window

Credit to Stephen Hurst for the above rules.
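To double check what the file will actually disable, the comments can be stripped back out so only the gid:sid pairs remain. A sketch ('list_disabled' is a hypothetical helper name):

```shell
# list_disabled FILE
# Prints the gid:sid entries from a disablesid.conf style file, ignoring
# blank lines, whole-line comments and trailing "# ..." comments.
list_disabled() {
    sed -e 's/#.*$//' -e 's/[[:space:]]*$//' -e '/^$/d' "$1"
}

# Example: is 129:12 in the list?
# list_disabled /etc/snort/disablesid.conf | grep -x '129:12'
```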

Re-download the ruleset (you might have to delete the existing ruleset file in /tmp first):

rm snortrules-snapshot-2990.tar.gz*

sudo /usr/local/bin/pulledpork.pl -c /etc/snort/pulledpork.conf -T -H && /usr/sbin/service snort restart
